In this issue we discuss the Fintech Marketplace, the rise and risks of AI in financial services, and the upcoming events over the next few months.

Following the success of our first insiders meeting in Dubai on the 30th of July, the Impact Team are looking forward to the Finbridge Global platform launching in the Gulf region with the first Innovation board being held in September.

Published by The Impact Team and Finbridge Global

August 2025

The fintech industry is booming, fuelled by digital transformation, innovative financial solutions, and supportive regulatory environments like those in the UAE’s Dubai International Financial Centre (DIFC) and Abu Dhabi Global Market (ADGM). Fintechs offer groundbreaking tools, from payment processing to blockchain and AI-driven analytics, but breaking into the enterprise market, particularly with banks and financial institutions, is no easy feat. Large enterprises present a gauntlet of challenges that can derail even the most promising fintechs. This white paper flips the narrative by highlighting common pitfalls fintechs encounter when selling to large enterprise clients and how to avoid them. Drawing on insights from Finbridge Global (www.finbridgeglobal.com), we outline the missteps that can sabotage partnerships and offer practical solutions to foster successful collaborations.

Large enterprises, especially banks, are notorious for their complex, drawn-out sales cycles. Fintechs can easily lose momentum by failing to navigate this labyrinth effectively.

· Ignoring Stakeholders: Enterprises have layered structures with procurement teams, IT departments, compliance officers, and C-suite executives, each with distinct priorities. Failing to engage all stakeholders early can stall progress.

· Underestimating Due Diligence: Banks’ rigorous evaluations of technology, security, and compliance can take months. Fintechs unprepared for this scrutiny risk rejection.

· Overcommitting to PoCs: Proofs of Concept are resource-intensive, and agreeing to extensive pilots without clear contract prospects can drain limited resources.

Impact: Prolonged sales cycles exhaust fintechs’ cash flow and distract from innovation, leading to missed opportunities.

Solution: Platforms like Finbridge Global streamline the process by connecting fintechs with pre-vetted enterprise clients, reducing time spent identifying decision-makers. The platform’s centralized hub showcases solutions, enabling efficient evaluations and faster partnerships.

The financial sector’s regulatory landscape, particularly in the UAE, is a minefield. Fintechs that misstep here can quickly lose enterprise trust.

· Lacking Regulatory Expertise: Many fintechs don’t have the in-house knowledge to navigate AML, KYC, or the UAE’s data protection laws (e.g., Federal Decree-Law No. 45/2021).

· Unable to Scale Compliance: Enterprises demand compliance frameworks that scale globally, which smaller fintechs may not be equipped to provide.

· Overlooking Cross-Border Rules: Serving multinational banks means complying with local and international regulations, like GDPR, which can overwhelm unprepared fintechs.

Impact: Regulatory missteps lead to delays, rejections, or reputational damage, with compliance costs straining budgets.

Solution: Finbridge Global offers regulatory guidance tailored to UAE and global markets, partnering with experts to align fintech offerings with enterprise standards, ensuring smoother onboarding.

Enterprises prioritize stability and reliability, and fintechs often struggle to prove they’re not a risky bet.

· No Track Record: New fintechs lack the case studies or references that enterprises trust, making them seem unproven.

· Underestimating Risk Aversion: Banks avoid partners without robust security or a proven track record, viewing them as liabilities.

· Cultural Clashes: Fintechs’ agile, startup mindset can conflict with enterprises’ risk-averse, process-driven culture, hindering collaboration.

Impact: Without trust, enterprises opt for legacy vendors, sidelining innovative fintechs. Those that lack credibility and reputation are often overlooked or rejected, or must spend resources building their security and improving their risk posture instead of engaging with client projects or developing their product.

Solution: Finbridge Global curates a network of vetted fintechs, providing enterprises with detailed profiles, case studies, and metrics. The platform’s maturity assessments help match fintechs to enterprise needs, while facilitated introductions ensure cultural alignment.

Integrating fintech solutions into enterprise IT systems is a technical tightrope that many fintechs fail to walk.

• Ignoring Legacy Systems: Many banks rely on outdated core systems incompatible with modern fintech platforms, leading to integration nightmares.

• Overlooking Scalability: Enterprises need solutions that handle high transaction volumes globally, and fintechs that can’t scale risk rejection.

• Skimping on Security: Failing to meet standards like ISO 27001 or PCI DSS can erode trust and halt partnerships.

Impact: Technical mismatches prolong implementation or lead to rejection, while customization costs drain resources.

Solution: Finbridge Global provides technical specifications and integration roadmaps, connecting fintechs with specialists to navigate legacy systems and ensure security compliance. Partnerships, such as the one with Drata, offer discounted ISO certifications to bolster credibility.

Limited resources and misaligned goals can be the final nails in the coffin for fintech-enterprise partnerships.

• Underfunding Sales Efforts: Fintechs with lean budgets struggle to build sales teams or execute marketing campaigns, limiting their reach.

• Ignoring Regional Nuances: Fintechs unfamiliar with the UAE’s business practices or regulations face barriers to market entry.

• Misaligned Value Propositions: Failing to articulate clear benefits or meet enterprises’ customization and long-term ROI expectations stalls deals.

Impact: Resource constraints and misaligned expectations prevent fintechs from competing effectively, leading to lost contracts.

Solution: Finbridge Global reduces client acquisition costs by connecting fintechs with targeted enterprise networks and providing marketing support. The platform helps refine value propositions through market insights and workshops, aligning fintechs with enterprise priorities.

Selling to large enterprises is a high-stakes game where fintechs can easily stumble. From endless sales cycles to regulatory pitfalls, trust gaps, technical challenges, and resource constraints, the path to partnership is fraught with ways to stop you short of a deal. Finbridge Global (www.finbridgeglobal.com) flips the script by addressing these challenges head-on. As an AI-powered platform, it connects fintechs with enterprises, provides regulatory and technical support, and builds trust through vetted networks and expert guidance. As Finbridge Global CEO Barbara Gottardi says, “We don’t believe the process should restart every time you change teams, nor should institutions re-ask the same questions in different formats. Fintechs should focus on building resilient products and maintaining certifications, not wasting time on repetitive tasks. We’ve built this platform with the industry, for the industry.” By joining Finbridge Global, fintechs and enterprises in the UAE and beyond can avoid these pitfalls, fostering partnerships that drive financial innovation.

Finbridge Global bridges the gap between fintechs and enterprise clients through a curated network, regulatory guidance, technical support, and market insights. Visit www.finbridgeglobal.com to join the mission to transform financial services.

The Impact Team, with offices in London, New York, Hong Kong, and Dubai, is a digital transformation consultancy driving customer-centric solutions and cybersecurity. Committed to ESG-friendly innovation, they empower organizations to thrive in the digital age.

Published by The Impact Team

August 2025

Introduction

The financial services industry is no stranger to disruption, but the rise of artificial intelligence (AI), particularly generative AI, has sparked a frenzy of excitement and apprehension. Headlines tout AI as a game-changer, promising efficiency gains and cost reductions that could replace entire swaths of white-collar work. Yet, as with any new hire, the new guy comes with baggage. Generative AI, powered by large language models (LLMs), introduces risks that threaten to undermine its transformative potential, especially in a highly regulated sector like finance. From data leakage to auditability challenges and looming regulatory oversight, financial institutions must tread carefully. This article explores the inherent weaknesses of generative AI in financial services, with a particular focus on data leakage, auditability, and regulatory challenges. Approaching AI like a stress test, we break down the risks to help institutions avoid costly missteps. By examining these pitfalls and offering practical solutions, we aim to guide financial firms toward safer, more effective AI adoption.

1 Data Leakage: The Silent Threat

Generative AI’s ability to process and generate human-like responses relies on vast datasets, but this strength is also its Achilles’ heel. Data leakage, where sensitive information fed into an AI system is inadvertently exposed, poses a significant risk in financial services, where confidentiality is paramount.

1.1 How Leakage Happens

Data leakage occurs when sensitive information, such as customer financial data, employee salaries, or proprietary strategies, is extracted through skilful or even accidental prompt engineering. For example, an HR manager might upload salary data to an AI for analysis, only for a subsequent user to prompt, “Generate a list of remuneration packages for all managers” and retrieve that sensitive information. Unlike traditional systems with strict access controls, generative AI models often lack robust mechanisms to prevent such disclosures. Once data is ingested, it should be considered potentially public, as skilled prompt hackers can exploit vulnerabilities to extract it.

1.2 Impact on Financial Institutions

In finance, where trust is the currency of client relationships, data leakage can be catastrophic. A breach exposing customer financial details or internal strategies could lead to regulatory fines, lawsuits, and reputational damage. For instance, under the UAE’s Federal Decree-Law No. 45/2021 on Personal Data Protection, mishandling personal data can result in severe penalties. Globally, regulations like GDPR impose fines of up to €20 million or 4% of annual worldwide turnover, whichever is higher, making leakage a costly misstep.
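As a rough illustration of that GDPR ceiling, the sketch below computes the upper bound of the higher fine tier, assuming the rule in GDPR Article 83(5): the greater of EUR 20 million or 4% of total worldwide annual turnover. The function name and the sample turnover figures are hypothetical, and actual fines are set case by case by the supervisory authority.

```python
def gdpr_max_fine_eur(annual_turnover_eur: float) -> float:
    """Upper bound of the higher GDPR fine tier (assumed: Article 83(5)).

    Returns the greater of EUR 20 million or 4% of annual turnover.
    """
    fixed_cap_eur = 20_000_000
    turnover_cap_eur = annual_turnover_eur * 4 / 100
    return max(fixed_cap_eur, turnover_cap_eur)


# Hypothetical firms: for the smaller one the fixed cap dominates,
# for the larger one the turnover-based cap does.
small_firm = gdpr_max_fine_eur(100_000_000)
large_firm = gdpr_max_fine_eur(2_000_000_000)
print(f"Small firm ceiling: EUR {small_firm:,.0f}")
print(f"Large firm ceiling: EUR {large_firm:,.0f}")
```

The point of the calculation is that the ceiling scales with the size of the institution, so large banks cannot treat the fixed amount as the worst case.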

1.3 Mitigating the Risk

Blanket bans on AI use have proven ineffective, as employees and executives, driven by competitive pressures, bypass restrictions to leverage AI’s efficiency gains. Instead, financial institutions are turning to third-party controls like tokenization and anonymization to sanitize data before it enters AI systems. These techniques replace sensitive information with non-identifiable tokens, reducing the risk of exposure. Additionally, guardrails (predefined rules limiting what AI can output) can block attempts to extract sensitive data. For example, a guardrail might prevent the AI from responding to prompts requesting employee or customer data unless explicitly authorized. Regular training on secure prompt crafting also helps employees avoid inadvertently leaking data.
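To make the two controls concrete, here is a minimal sketch of tokenization and a prompt guardrail. It is illustrative only: the regular expressions, the `BLOCKED_TOPICS` list, the salt, and the function names are all assumptions made for this example, not a description of any particular product, and a production system would rely on a far more thorough detector for personal data.

```python
import hashlib
import re

# Hypothetical patterns for this sketch; real detectors cover many more data types.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
IBAN = re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b")

# Hypothetical list of topics that need explicit authorization.
BLOCKED_TOPICS = ("salary", "remuneration", "account number")


def tokenize(text: str, salt: str = "demo-salt") -> str:
    """Replace e-mail addresses and IBAN-like strings with opaque tokens."""

    def make_replacer(kind: str):
        def replacer(match: re.Match) -> str:
            # A salted hash gives the same token for the same value every time.
            digest = hashlib.sha256((salt + match.group()).encode()).hexdigest()[:8]
            return f"<{kind}_{digest}>"

        return replacer

    text = EMAIL.sub(make_replacer("EMAIL"), text)
    return IBAN.sub(make_replacer("IBAN"), text)


def guardrail_allows(prompt: str, authorized: bool = False) -> bool:
    """Reject prompts that mention a blocked topic unless the caller is authorized."""
    lowered = prompt.lower()
    return authorized or not any(topic in lowered for topic in BLOCKED_TOPICS)


prompt = "Pay jane.doe@example.com from AE070331234567890123456 today"
print(tokenize(prompt))
print(guardrail_allows("Generate a list of remuneration packages for all managers"))
```

Keyword matching is deliberately simplistic here; it shows where the check sits in the flow rather than how robust detection should work.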

2 Auditability: Tracking the Black Box

Generative AI’s decision-making process is often opaque, raising concerns about auditability in a sector where transparency is non-negotiable. Financial institutions rely on clear audit trails to justify decisions, comply with regulations, and defend against disputes, but AI’s black-box nature complicates this.

2.1 The Auditability Challenge

When AI assists in decisions such as credit approvals, fraud detection, or customer segmentation, it’s critical to understand the basis for its outputs. Unlike traditional rule-based systems, generative AI models like LLMs don’t provide a clear log of their reasoning. If an AI recommends denying a loan, for instance, regulators or auditors may demand an explanation of the factors considered, but the model’s complex neural networks make it difficult to trace the decision path. This lack of transparency can lead to compliance failures or legal challenges, particularly in jurisdictions with strict oversight from bodies like the UAE’s Central Bank or the U.S. SEC.

2.2 Impact on Compliance

Auditability gaps can cripple compliance efforts. Regulations such as the Basel III framework or the UAE’s Anti-Money Laundering (AML) guidelines require institutions to document decision-making processes thoroughly. Without an audit trail, firms risk regulatory penalties or operational inefficiencies when disputes arise. For example, if a customer contests a loan denial, the institution must provide evidence of fair and compliant decision-making, evidence that AI may not readily supply.

2.3 Building Robust Audit Trails

To address auditability, financial institutions are adopting AI systems with enhanced logging capabilities. These systems record inputs, outputs, and contextual metadata, creating a partial audit trail. Some firms are exploring explainable AI (XAI) tools, which provide simplified explanations of model decisions, though these are still evolving. Third-party platforms can also impose structured workflows, ensuring prompts and outputs are logged for review. For instance, integrating AI with existing compliance systems can capture decision rationales, aligning with regulatory expectations. Training staff to document AI interactions manually, while imperfect, can also bridge the gap until more advanced solutions emerge.
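The logging idea can be sketched in a few lines. The example below records each prompt, output, and contextual metadata as an entry, and chains entries together by hash so that later edits are detectable. The hash chain is an assumption added for this illustration rather than something the approaches above prescribe, and the class, field, and model names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """In-memory, append-only log of AI interactions with a simple hash chain."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash everything except the stored hash itself.
        body = {key: value for key, value in entry.items() if key != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, user: str, prompt: str, output: str, **metadata) -> dict:
        """Store one interaction; metadata values must be JSON-serializable."""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "prompt": prompt,
            "output": output,
            "metadata": metadata,
            "previous_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True if no entry has been altered or reordered."""
        previous_hash = ""
        for entry in self.entries:
            if entry["previous_hash"] != previous_hash:
                return False
            if entry["hash"] != self._digest(entry):
                return False
            previous_hash = entry["hash"]
        return True


log = AuditLog()
log.record("analyst-1", "Assess loan application 123", "Recommend manual review",
           model="demo-llm", case_id="123")
print(log.verify())
```

A log like this captures what was asked and answered, not why the model answered as it did, which is why the text describes the resulting audit trail as partial.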

As AI adoption accelerates, regulators are sharpening their focus on its risks, creating a complex compliance landscape for financial institutions. The financial sector’s stringent regulatory environment demands that AI systems align with existing and emerging standards, but many firms are unprepared for this scrutiny.

3.1 Evolving Regulatory Landscape

Regulators worldwide are grappling with AI’s implications. In the UAE, the Central Bank and the Securities and Commodities Authority are beginning to incorporate AI-specific guidelines into their frameworks, emphasizing data protection, transparency, and accountability. Globally, the EU’s Artificial Intelligence Act, with most of its obligations set to apply from 2026, classifies high-risk AI systems (including those in finance) and imposes strict requirements for risk management, transparency, and human oversight. Similarly, the U.S. is exploring AI regulations through agencies like the SEC and CFPB, focusing on bias, fairness, and data security.

3.2 Challenges for Financial Institutions

Compliance with these regulations requires significant investment in governance frameworks, which many firms lack. For instance, ensuring AI systems are free from bias, a key regulatory concern, demands rigorous testing and monitoring, yet LLMs can inadvertently perpetuate biases present in their training data. Additionally, meeting transparency requirements, such as disclosing AI’s role in decision-making, clashes with the technology’s opaque nature. Smaller fintechs, in particular, may struggle to afford the legal and compliance expertise needed to navigate this landscape, risking regulatory penalties or exclusion from enterprise partnerships.

3.3 Preparing for Compliance

Proactive measures can help institutions stay ahead of regulatory demands. First, adopting AI governance frameworks that align with standards like ISO/IEC 42001 (AI management systems) can demonstrate compliance readiness. Second, partnering with third-party providers offering regulatory-compliant AI solutions can reduce the burden. For example, platforms like Finbridge Global (www.finbridgeglobal.com) provide regulatory guidance tailored to financial services, helping firms align AI use with local and global standards. Finally, regular audits of AI systems for bias, security, and compliance can pre-empt regulatory issues, ensuring firms remain on the right side of the law.
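One simple check that a regular bias audit might include is a comparison of approval rates across customer groups. The sketch below is only an illustration under stated assumptions: the group labels, the sample decisions, and the choice of an approval-rate ratio as the metric are all hypothetical, and a real audit would use metrics and thresholds agreed with compliance and the relevant regulator.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Given (group, approved) pairs, return the approval rate for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Lowest approval rate divided by the highest; 1.0 means identical rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so the rates are identical
    return min(rates.values()) / highest


# Hypothetical AI-assisted loan decisions for two customer groups.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", True),
]
rates = approval_rates(sample)
print(rates, round(rate_ratio(rates), 2))
```

A ratio well below 1.0 does not prove unfair treatment on its own, but it flags where a closer review of the model and its inputs is warranted.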

Beyond data leakage, auditability, and regulation, generative AI introduces other challenges. Training data poisoning, where malicious actors manipulate input data to skew outputs, threatens model reliability. Prompt hacking, a growing field, exploits vulnerabilities in AI interfaces to extract sensitive information or bypass restrictions. Hallucinations (confidently incorrect outputs) can mislead decision-makers, while reputational risks arise when AI generates inappropriate or offensive content, as seen in past incidents where models produced harmful propaganda.

Generative AI holds immense promise for financial services, but its risks (data leakage, auditability gaps, and regulatory challenges) require careful management. Blanket bans have failed, and running internal AI instances without robust controls invites disaster. Instead, financial institutions must adopt a layered approach: tokenization and guardrails to prevent data leakage, enhanced logging and explainable AI for auditability, and proactive governance to meet regulatory demands. By stress-testing AI systems and partnering with platforms like Finbridge Global, firms can harness AI’s potential while avoiding the pitfalls that could lose the enterprise in days. The new guy may have issues, but with the right controls, he can still be a star.

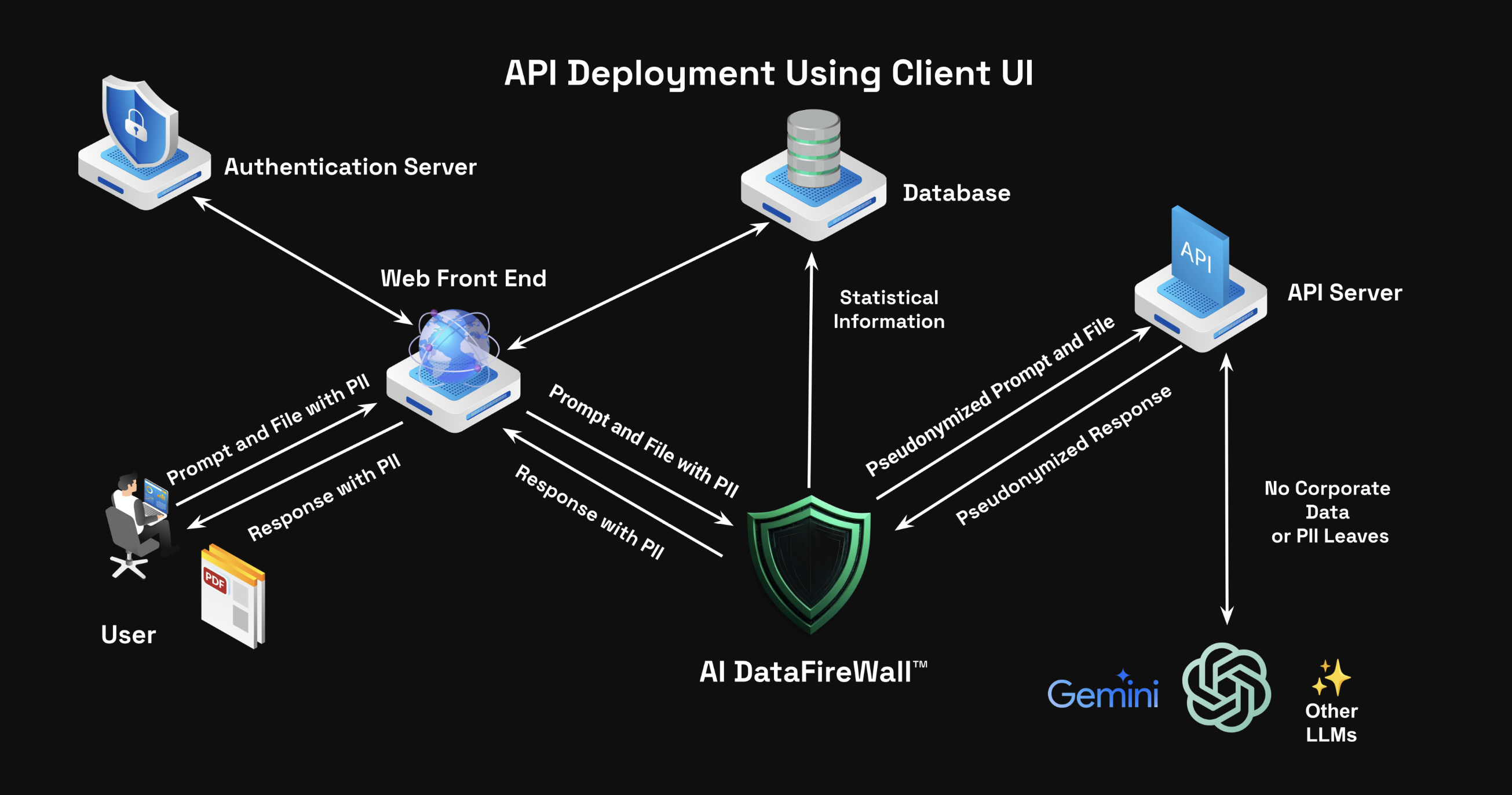

We have been working with our friends at contextul for a while now and we're thrilled to share that Impact Team Insiders can now gain exclusive access to the AI Firewall service as part of the Europe and Gulf regional trial.

What is it?

It's a service that sits between your employee and the array of LLMs. It scans the LLM query and any associated documents sent to it by the employee, identifies all potential cases of personal or company-confidential information, replaces this data with dummy data, and then sends the query onward to the public LLM. When the response is received, it reverses the substitution, replacing the dummy data with the original data. This ensures the employee doesn't inadvertently introduce a data breach scenario.
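The round trip described above can be sketched as follows. This is a toy illustration and not the actual AI Firewall implementation: it only handles e-mail addresses, the public LLM is replaced by a stand-in function that echoes its prompt, and every name in the code is hypothetical.

```python
import re

# Hypothetical detector: this sketch only recognises e-mail addresses.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def mask(text: str):
    """Swap each e-mail address for a dummy one and remember the mapping."""
    mapping = {}

    def replacer(match: re.Match) -> str:
        original = match.group()
        # Reuse the same dummy if this address was already seen.
        for dummy, known in mapping.items():
            if known == original:
                return dummy
        dummy = f"user{len(mapping) + 1}@example.invalid"
        mapping[dummy] = original
        return dummy

    return EMAIL.sub(replacer, text), mapping


def unmask(text: str, mapping: dict) -> str:
    """Put the original values back in place of the dummy data."""
    for dummy, original in mapping.items():
        text = text.replace(dummy, original)
    return text


def fake_llm(prompt: str) -> str:
    """Stand-in for a public LLM call; it only ever sees the masked prompt."""
    return f"Draft reply regarding: {prompt}"


def firewall_query(prompt: str, llm=fake_llm) -> str:
    """Mask the prompt, call the LLM, then restore the original data."""
    masked, mapping = mask(prompt)
    return unmask(llm(masked), mapping)


print(firewall_query("Email amira@bank.example about the audit"))
```

The mapping never leaves the firewall, so the public LLM works only with dummy values while the employee still receives a response containing the real ones.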

Why use it?

LLMs are growing in number, and the rate at which new ones are introduced is only speeding up. The efficiency gains from adopting these tools are obvious for all to see, and corporate enterprises are adopting one of three possible treatment strategies as the world grapples with how best to use them safely.

1. Ban them completely (very safe, but denies your employees access to any potential efficiency gains)

2. Allow public access to them, but insist that the employee adheres to a privacy and usage policy (virtually impossible to govern)

3. House your own LLM within a corporate walled garden (very expensive and always out of date)

Trump’s UAE visit unveiled "Stargate," a massive US-UAE AI campus, signaling a shift in Gulf strategy from oil to AI dominance. Backed by G42 and Nvidia, it aims to counter China’s tech rise. The UAE and Saudi Arabia invest heavily in AI infrastructure, but face challenges in attracting top talent.

Barq, Saudi Arabia’s digital payment app, gained 7M users from 150 nationalities in its first year, issuing 6.5M cards and processing 500M transactions worth SAR 73B. Licensed by SAMA, it supports cross-border payments and Vision 2030’s cashless economy goals, driving financial inclusion and digital tourism.

Money20/20 Middle East in Riyadh connects global fintech leaders with Saudi decision-makers, driving innovation in a $1T economy. Focused on Vision 2030’s digital transformation, it unites banks, tech giants, startups, and regulators to shape strategies, forge partnerships, and close deals.

Finbridge Global, an AI-driven fintech marketplace, has launched in the Gulf region in partnership with The Impact Team, a digital transformation specialist. The platform connects banks, investors, and fintechs, streamlining partnerships in the GCC’s booming fintech ecosystem.

The Gulf is a fintech hotspot, with the market projected to surpass USD 3 billion by 2025. Governments in the UAE, Saudi Arabia, and Bahrain are driving digital-first ecosystems through:

The Impact Team brings localized expertise, compliance knowledge, and community-driven Innovation Boards to foster collaboration. Together, they aim to transform how financial institutions and startups connect, accelerating innovation across the GCC.
